Shift Mainframe Data and Batch Processing to Hadoop

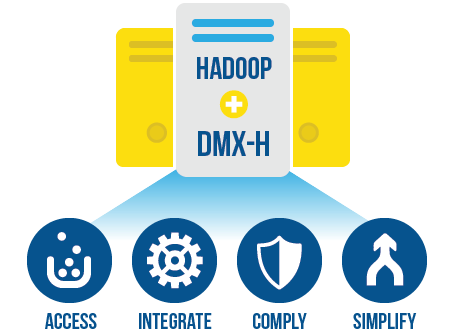

Precisely’s Mainframe Access & Integration for Hadoop Solution combines decades of mainframe expertise with current Hadoop capabilities to make offloading mainframe data and workloads straightforward:

- Access – bring mainframe data into Hadoop and Apache Spark in its original mainframe format, and work with it like any other data source

- Integrate – blend mainframe, legacy and Big Data sources for better business insights

- Comply – certified with critical Hadoop ecosystem projects for security and governance

- Simplify – use the skill sets already in your organisation, with no need to hire or train new developers

Fast-Track Data Preparation & Development

Mainframe and Hadoop skills are both in high demand. Bridge the gap and cut costly, time-consuming data collection and preparation tasks:

- Replace complex manual code (COBOL, MapReduce, Spark) with a powerful, easy-to-use graphical development environment

- Built-in support for COBOL copybooks, mainframe file and record types (VSAM, fixed-length and variable-length records) and mainframe data encodings (packed decimal, EBCDIC and more), all without coding

- Native LDAP and Kerberos authentication support, integration with Apache Sentry and Apache Ranger, plus FTPS and Connect:Direct mainframe data transfer

- Efficiently copy mainframe data to Hadoop or Apache Spark while preserving its native format for compliance, with no need to stage translated copies
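To show what "packed decimal" and "EBCDIC" mean in practice, here is a minimal hand-written sketch of decoding one fixed-length mainframe record. This is not the Precisely product or its API (the product handles these formats without coding); it only illustrates the work involved. Assumptions: the record layout comes from a hypothetical copybook with a 5-character `PIC X(5)` text field followed by a `PIC S9(3)V99 COMP-3` amount, the text uses EBCDIC code page 037 (Python's `cp037` codec; your mainframe may use a different code page), and the helper names `unpack_comp3` and `decode_record` are illustrative only.

```python
from decimal import Decimal


def unpack_comp3(data: bytes, scale: int = 0) -> Decimal:
    """Decode an IBM packed-decimal (COMP-3) field.

    Each byte holds two 4-bit digits; the final nibble is the sign
    (0xD or 0xB = negative; 0xC, 0xF, 0xA, 0xE = positive/unsigned).
    `scale` is the number of implied decimal places (the V in PIC S9(n)V99).
    """
    if not data:
        raise ValueError("empty COMP-3 field")
    nibbles = []
    for byte in data:
        nibbles.append(byte >> 4)    # high-order digit
        nibbles.append(byte & 0x0F)  # low-order digit (or sign)
    sign_nibble = nibbles.pop()      # last nibble carries the sign
    if any(n > 9 for n in nibbles):
        raise ValueError("invalid digit nibble in COMP-3 field")
    if sign_nibble in (0x0B, 0x0D):
        sign = 1                     # Decimal tuple: 1 means negative
    elif sign_nibble in (0x0A, 0x0C, 0x0E, 0x0F):
        sign = 0
    else:
        raise ValueError(f"invalid sign nibble: {sign_nibble:#x}")
    # Build the value from (sign, digits, exponent) to avoid float rounding.
    return Decimal((sign, tuple(nibbles), -scale))


def decode_record(record: bytes) -> dict:
    """Decode one record for a hypothetical copybook:

        05 CUST-NAME    PIC X(5).
        05 CUST-AMOUNT  PIC S9(3)V99 COMP-3.
    """
    if len(record) != 8:
        raise ValueError(f"expected 8-byte record, got {len(record)}")
    return {
        "name": record[0:5].decode("cp037"),             # EBCDIC text
        "amount": unpack_comp3(record[5:8], scale=2),    # packed decimal
    }


if __name__ == "__main__":
    raw = b"\xC8\xC5\xD3\xD3\xD6\x12\x34\x5D"
    print(decode_record(raw))
```

Here the bytes `C8 C5 D3 D3 D6` are "HELLO" in EBCDIC, and `12 34 5D` packs the digits 12345 with a negative sign, which the two implied decimal places turn into -123.45. Using `Decimal` rather than `float` keeps the value exact, which matters for financial fields that commonly use COMP-3.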
